Recruit the right talent today, faster.

Get a steady stream of great candidates and help from professional recruiters any time you need it. Our AI software and community of OnDemand recruiters deliver top talent to employers at any scale, from startups to Fortune 100 companies.

Utilize the Power of Data and AI

Sit back and relax: our AI software automatically runs outreach campaigns across a network of over 150 million talent profiles.

Easily review qualified and curated candidates in one simple dashboard. Spend less time searching and screening and more time speaking to the right people.

Get started

Get the help you need with hiring

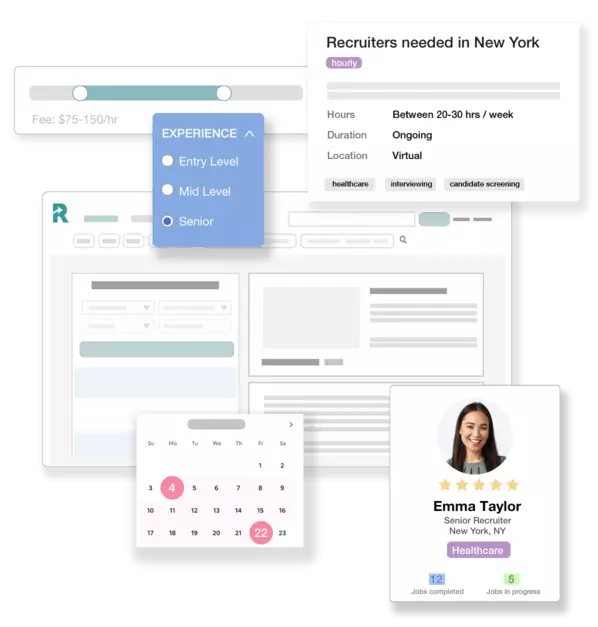

Have a specialized role you're trying to fill? Our Recruiters OnDemand make it easy for companies at any scale to expand their own in-house hiring team with our network of experienced HR professionals.

Choose your preferred recruiter, or team of recruiters, to assist with any part of your talent acquisition effort. Scale up or down flexibly with your hiring demands and reduce your overhead.

Learn more

Whether you are hiring your first employee or your one thousandth, we have your talent pipeline covered.

Hire a Recruiter

We have a reach of over 850,000 recruiters and HR professionals across our social media touchpoints. We run the largest network of recruiters nationwide, with 30,000 members on our platform. Looking to hire a recruiter or HR professional on a full-time or part-time basis? We can help!

Start

Media

Instantly reach the best candidates for editorial, publishing, content creation, content marketing, social marketing, video, and other creative roles. We are the leading source of full-time and freelance hires for top publishers, content marketing teams, news organizations, streaming services, video teams, and more.

Start

Finance

With more than 700,000 members, we have one of the largest talent pools in the financial sector. Access tailored executive search services and vetted candidate matches. We help investment banks, hedge funds, private equity, and venture capital firms get matched with top financial service professionals.

Start

Top Tech Company to Watch

Top 35 Most Influential Career Sites

9 Best Websites for Finding Talent